Microsoft-Cloud-Native

Microsoft Azure OpenAI

Azure OpenAI Service provides REST API access to OpenAI’s powerful language models including the GPT-4, GPT-35-Turbo, and Embeddings model series.

These models can be adapted to your specific tasks, including but not limited to:

- content generation

- summarization

- semantic search

- natural language to code translation
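Semantic search with the Embeddings models generally works by turning the query and each document into vectors, then ranking documents by how close their vectors are to the query vector, commonly measured with cosine similarity. The following is a minimal sketch, assuming the embeddings have already been fetched from an embeddings deployment. The function names, document names, and three-dimensional vectors are made-up placeholders; real embeddings have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: list[float], doc_vecs: dict[str, list[float]], top_k: int = 3):
    """Return the top_k (doc_id, score) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Hypothetical embeddings, for illustration only.
    docs = {
        "pricing": [0.9, 0.1, 0.0],
        "quotas": [0.1, 0.9, 0.1],
        "models": [0.2, 0.2, 0.9],
    }
    query = [0.8, 0.2, 0.1]
    print(rank_documents(query, docs, top_k=2))
```

In practice, a vector index (for example, the one in a search service) replaces the linear scan, but the ranking criterion is the same idea.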

Users can access the service through REST APIs, the Python SDK, or the web-based interface in Azure OpenAI Studio.
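As an illustration of the REST access path, the sketch below builds a chat completions request using only the Python standard library. It assumes the `POST {endpoint}/openai/deployments/{deployment}/chat/completions?api-version=...` route with key authentication through the `api-key` header. The resource name, deployment name, and API version are placeholders, so check the REST reference for the versions your resource supports.

```python
import json
import urllib.request

# Placeholder values (hypothetical): replace with your own resource, deployment, and key.
ENDPOINT = "https://my-resource.openai.azure.com"
DEPLOYMENT = "my-gpt-4-deployment"
API_VERSION = "2024-02-01"  # example version; confirm against the current docs


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       messages: list[dict], api_version: str = API_VERSION) -> urllib.request.Request:
    """Build (but do not send) a POST request to the chat completions route."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(
        ENDPOINT, DEPLOYMENT, "<your-api-key>",
        [{"role": "user", "content": "Summarize Azure OpenAI in one sentence."}],
    )
    print(req.get_method(), req.full_url)
    # send_chat_request(req) would perform the actual network call.
```

Separating request construction from sending keeps the URL and headers easy to inspect or test without network access. The Python SDK wraps the same route.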

General Documentation on Microsoft Learn

Overview

Grounding LLM

Grounding is the process of using large language models (LLMs) with information that is use-case specific, relevant, and not available as part of the LLM’s trained knowledge. It is crucial for ensuring the quality, accuracy, and relevance of the generated output. While LLMs come with a vast amount of knowledge already, this knowledge is limited and not tailored to specific use-cases. To obtain accurate and relevant output, we must provide LLMs with the necessary information. In other words, we need to “ground” the models in the context of our specific use-case.
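In practice, grounding usually means retrieving a few relevant snippets of your own data and placing them in the prompt, with an instruction to answer only from them. The following is a minimal sketch, assuming the snippets have already been retrieved (for example, by a semantic search step). The prompt wording, function name, and sample snippet are hypothetical, not a prescribed format.

```python
# Hypothetical system prompt; tune the wording for your own use case.
SYSTEM_TEMPLATE = (
    "You are an assistant that answers questions using only the sources below. "
    "If the answer is not in the sources, say you don't know.\n\nSources:\n{sources}"
)


def build_grounded_messages(question: str, snippets: list[str]) -> list[dict]:
    """Return chat messages whose system prompt carries the use-case data."""
    # Number the snippets so the model (and the user) can refer to them.
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(snippets, start=1))
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(sources=sources)},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    # Made-up snippet standing in for data from your own documents.
    for message in build_grounded_messages(
        "What is the refund window?",
        ["Refunds are accepted within 30 days of purchase."],
    ):
        print(message["role"], "->", message["content"])
```

The resulting list can be sent as the `messages` of a chat completions call. Only the system prompt changes between questions, so the model's trained knowledge is supplemented rather than retrained.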

Private ChatGPT

First, I strongly recommend starting with this introductory post: How to create a private ChatGPT with your own data

Chat Copilot Sample Application - This sample allows you to build your own integrated large language model (LLM) chat copilot. The sample is built on Microsoft Semantic Kernel.

Architecture

High Level Architecture

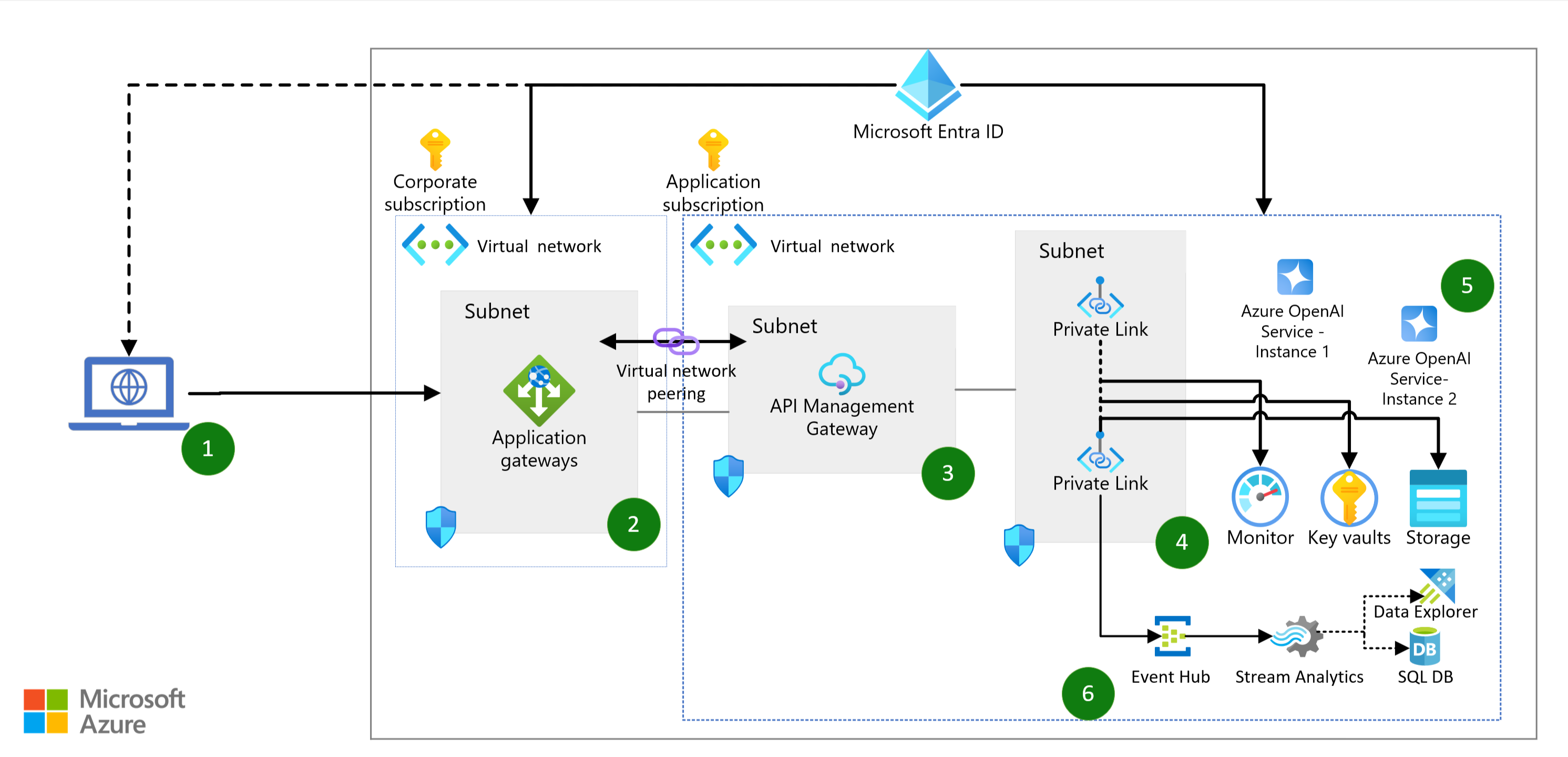

Building a Private ChatGPT Interface With Azure OpenAI

Enterprise Implementation

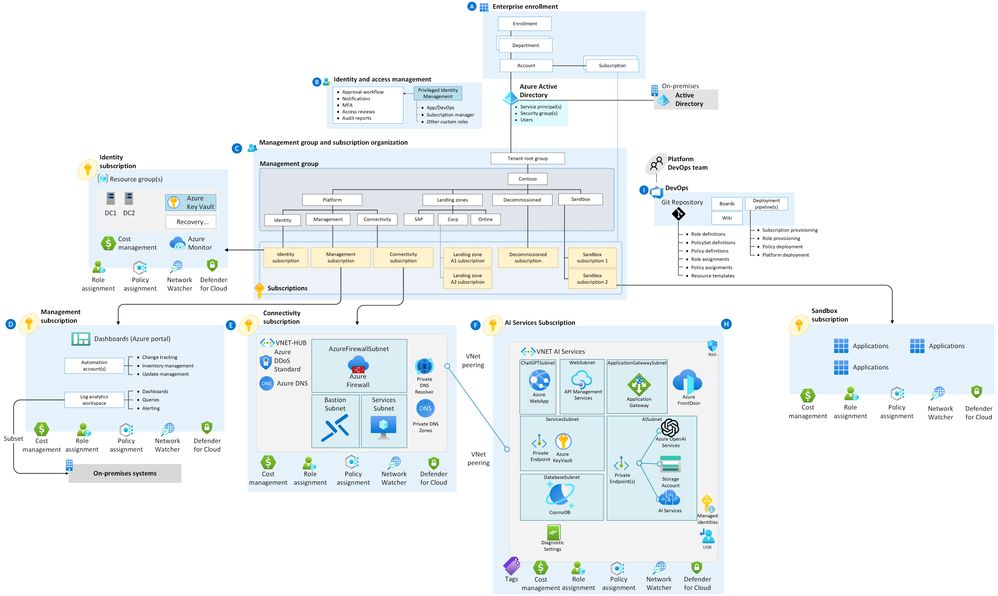

Azure OpenAI Landing Zone reference architecture

GitHub Repository with implementation

Implement logging and monitoring for Azure OpenAI models

Enterprise Deployment with Azure API Management

- Azure API Management with Azure OpenAI

- Azure OpenAI Service Load Balancing with Azure API Management

- Manage Azure Open AI using APIM
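The articles above implement load balancing inside API Management policies. To illustrate only the underlying idea, here is a minimal client-side sketch that rotates round-robin across several Azure OpenAI endpoints and temporarily skips any endpoint that returned HTTP 429. This is not APIM policy syntax. The class name and endpoint URLs are hypothetical.

```python
import itertools
import time


class RoundRobinBackends:
    """Rotate across endpoints, skipping ones that were recently throttled (hypothetical helper)."""

    def __init__(self, endpoints: list[str], clock=time.monotonic):
        self._endpoints = list(endpoints)
        self._cycle = itertools.cycle(self._endpoints)
        self._blocked_until: dict[str, float] = {}
        self._clock = clock  # injectable so the logic can be tested without waiting

    def mark_throttled(self, endpoint: str, retry_after_s: float) -> None:
        """Record a 429 response: skip this endpoint for retry_after_s seconds."""
        self._blocked_until[endpoint] = self._clock() + retry_after_s

    def next_endpoint(self) -> str:
        """Return the next available endpoint, or raise if all are throttled."""
        now = self._clock()
        for _ in range(len(self._endpoints)):
            candidate = next(self._cycle)
            if self._blocked_until.get(candidate, 0.0) <= now:
                return candidate
        raise RuntimeError("all backends are throttled")


if __name__ == "__main__":
    pool = RoundRobinBackends([
        "https://resource-eastus.openai.azure.com",   # hypothetical
        "https://resource-westeu.openai.azure.com",   # hypothetical
    ])
    print(pool.next_endpoint())
```

Placing this logic in API Management, as the linked articles do, keeps it out of every client and lets all callers share one view of which backends are throttled.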

Tutorial, Walkthrough

- Generative AI - 18 Lessons, Get Started Building with Generative AI

- Introduction to Azure OpenAI Service - 1hr3min

- Develop Generative AI solutions with Azure OpenAI Service - 5hr34min

- Azure Open AI Blueprint – Navigating from MVP to Enterprise Environments

- Intelligent App Workshop for Microsoft Copilot Stack

Demos

Optimization

Additional Resources

- Azure OpenAI Models: Azure OpenAI Service models

- Azure AI News: Azure AI Techcommunity Blog

- Azure OpenAI Pricing: Azure OpenAI Service - Pricing

- Quota & Limits: Azure OpenAI Service quotas and limits
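When a deployment exceeds its tokens-per-minute or requests-per-minute quota, the service responds with HTTP 429. A common client-side mitigation is to retry with exponential backoff and honor a `Retry-After` header when one is present. The following is a minimal sketch, assuming requests are made with `urllib` (so failures surface as `urllib.error.HTTPError`). The function name and default limits are illustrative choices, not values from the quota documentation.

```python
import random
import time
import urllib.error


def _retry_delay(err: urllib.error.HTTPError, attempt: int,
                 base_delay_s: float, max_delay_s: float) -> float:
    """Prefer a numeric Retry-After header; otherwise use jittered exponential backoff."""
    header = err.headers.get("Retry-After") if err.headers is not None else None
    if header is not None:
        try:
            return min(float(header), max_delay_s)
        except ValueError:
            pass  # e.g. an HTTP-date value; fall back to backoff below
    # Full jitter: a random delay between 0 and base * 2**attempt (capped).
    return random.uniform(0, min(max_delay_s, base_delay_s * (2 ** attempt)))


def call_with_backoff(send, max_retries: int = 5, base_delay_s: float = 1.0,
                      max_delay_s: float = 60.0, sleep=time.sleep):
    """Call send(); on HTTP 429, wait and retry up to max_retries times."""
    for attempt in range(max_retries + 1):
        try:
            return send()
        except urllib.error.HTTPError as err:
            # Re-raise anything that is not throttling, or when retries are used up.
            if err.code != 429 or attempt == max_retries:
                raise
            sleep(_retry_delay(err, attempt, base_delay_s, max_delay_s))
```

Here `send` is any zero-argument callable, for example `lambda: urllib.request.urlopen(request)`. Injecting `sleep` keeps the retry logic testable. Retries smooth over short bursts, but sustained 429s usually mean the deployment needs more quota or traffic should be spread across deployments.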